Code review has always been a critical step in software development—but also one of the most frustrating. It’s where bugs are caught, standards are enforced, and quality is preserved. Yet it’s often slow, inconsistent, and burdensome, especially as engineering teams scale or deadlines tighten.

Early AI tools promised to help, but most fell short: they offered autocomplete suggestions and syntax checks without any real comprehension of code intent or architecture.

That’s now changing in a big way.

With Anthropic’s latest Claude Code capabilities, AI is stepping into a more meaningful role—augmenting how developers write, understand, and improve code. It’s not just about faster delivery; it’s about smarter collaboration, cleaner pull requests, and more efficient reviews.

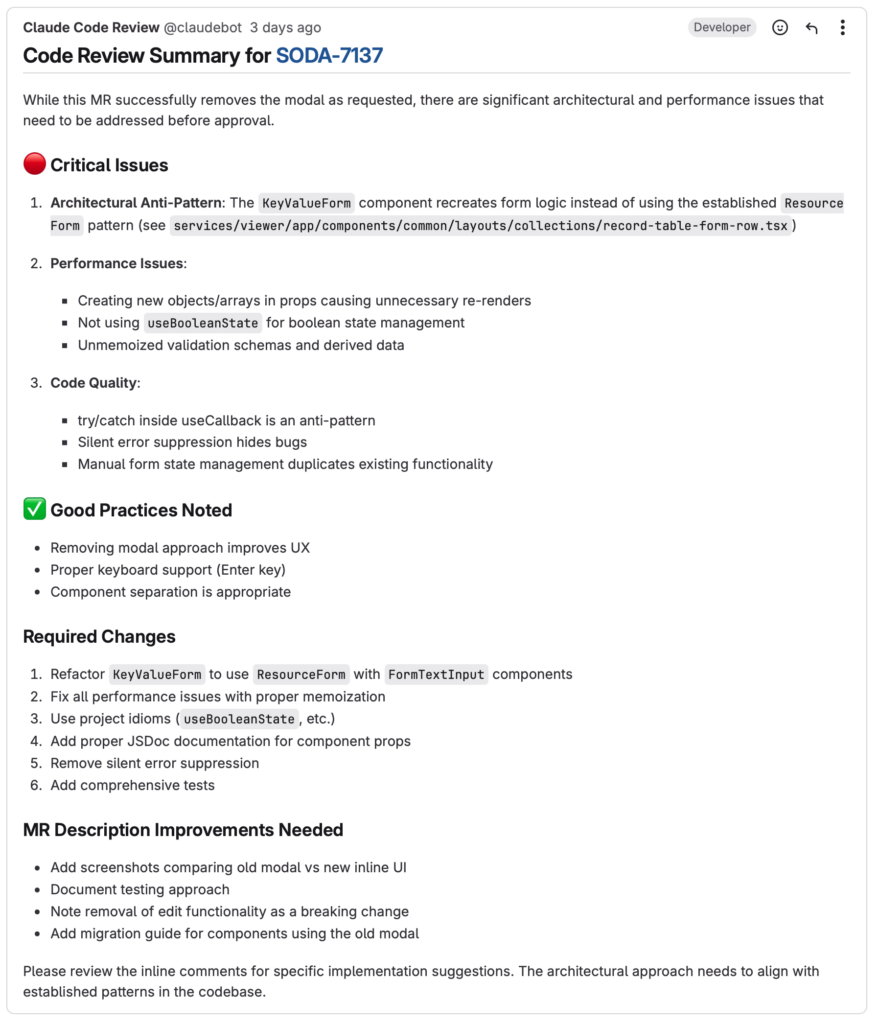

At CloudSoda, we’ve seen this transformation firsthand. Claude Code plays a central role in how we handle Merge Requests in GitLab—reading Jira tickets to understand the goal and acceptance criteria of each change, then providing inline feedback and overall commentary on the proposed code. It helps us catch issues early, improve code quality, and streamline human reviews.

And while this post focuses on code review, Claude Code is also showing promise in other areas of the development lifecycle—from early-stage prototyping to simple feature implementation. We’ll share more on that in future posts.

Let’s take a closer look at what’s been broken in traditional code review, what’s being fixed, and where this all goes next.

The Real-World Pain of Human Code Review

Code review, in theory, should elevate the entire engineering process. But in reality, it’s full of friction:

- Time-Consuming: In busy teams, reviews can sit in queues for days. Reviewers juggle competing priorities, and submitters are left waiting.

- Subjective and Inconsistent: Feedback varies wildly by reviewer. Some nitpick naming conventions; others miss logic flaws. Standards drift over time.

- Mentally Draining: Reviewing dozens of lines of code takes sustained attention and deep context—especially difficult when switching between projects or domains.

- Psychologically Tense: For junior engineers, review cycles can feel like judgment. For seniors, it’s another time-consuming task on an already full plate.

When done well, code review adds huge value. But done poorly—or rushed—it becomes a blocker and a burden.

The First Wave of AI: Useful but Shallow

AI assistants like GitHub Copilot, alongside static analyzers and automated linters, made developers faster at writing code. They suggested completions, pointed out syntax issues, and helped enforce style guides.

But when it came to actual review—critical thinking, performance awareness, architectural fit—they missed the mark:

- No Deep Understanding: They evaluated code line by line, not as part of a larger system.

- Limited Scope: They couldn’t interpret business logic or assess tradeoffs.

- Lack of Conversation: Developers had no way to ask follow-ups, explore alternatives, or dig deeper into why a suggestion was made.

They were fast, but not thoughtful.

Anthropic’s Claude Changes the Equation

Anthropic’s latest version of Claude Code moves past these limitations and into true AI collaboration. It doesn’t just scan code—it understands it.

Here’s what Claude brings to the table:

- Semantic Analysis: Claude interprets logic and intent, surfacing subtle bugs or inefficiencies that would otherwise slip through unnoticed.

- Merge Request Summaries: It can read and summarize entire MRs with natural language explanations of what changed, why, and what to watch for.

- Interactive Conversations: Developers can ask Claude “Is there a risk of concurrency issues here?” or “What’s a better approach?” and get nuanced responses.

- Refactoring Suggestions: Rather than just flagging problems, Claude proposes real solutions—complete with rationale and alternatives.

- Cross-File Awareness: Claude sees the broader context—understanding how changes impact other files, modules, or workflows.

- Jira-Aware Reviews: Claude Code can read the Jira ticket description to understand the goal and acceptance criteria of the Merge Request, helping ensure the submitted code aligns with the original intent. It then provides both inline feedback and general commentary on how well the changes meet those requirements.

It’s like having a senior engineer on demand—one who works fast, never tires, and gives consistent, thoughtful feedback every time.
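To make the Jira-aware review idea a little more concrete, here is a minimal sketch of how a ticket's goal and acceptance criteria might be combined with a Merge Request diff into a single review prompt. This is an illustration only, not our actual integration: the `Ticket` and `MergeRequest` classes, their fields, and `build_review_prompt` are hypothetical names, and the sketch deliberately makes no calls to the Jira, GitLab, or Claude APIs.

```python
from dataclasses import dataclass, field


@dataclass
class Ticket:
    """Hypothetical, simplified view of a Jira ticket."""
    key: str
    summary: str
    acceptance_criteria: list[str] = field(default_factory=list)


@dataclass
class MergeRequest:
    """Hypothetical, simplified view of a GitLab Merge Request."""
    title: str
    diff: str


def build_review_prompt(ticket: Ticket, mr: MergeRequest) -> str:
    """Combine the ticket's intent with the proposed change into one prompt."""
    # Render each acceptance criterion as a bullet, with a fallback if none exist.
    criteria = "\n".join(f"- {c}" for c in ticket.acceptance_criteria)
    if not criteria:
        criteria = "- (none listed)"
    return (
        f"You are reviewing the merge request '{mr.title}'.\n"
        f"It implements ticket {ticket.key}: {ticket.summary}\n\n"
        f"Acceptance criteria:\n{criteria}\n\n"
        "Give inline feedback tied to specific lines, then overall commentary "
        "on how well the change meets the acceptance criteria.\n\n"
        f"Diff:\n{mr.diff}"
    )
```

The point of the sketch is the ordering: the reviewer sees the intent and the acceptance criteria before the diff, so feedback can be judged against what the change was supposed to achieve rather than against the code in isolation.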

The Business Case: It’s Not Just About Developer Speed

For engineering leaders and product teams, the value of Claude Code goes far beyond faster merges.

- Higher-Quality Code: Claude identifies issues early, before QA or production. That means fewer bugs, better architecture, and more secure, performant releases.

- Faster Time to Market: Code review stops being a bottleneck. MRs move faster, teams iterate more, and velocity increases without sacrificing quality.

- Happier, More Engaged Engineers: Developers spend less time on redundant review cycles and more time on creative, high-impact work. That’s a recipe for lower attrition and stronger team culture.

- More Scalable Processes: AI review levels the playing field. It reinforces standards, offers consistent guidance, and helps junior devs level up without overburdening senior staff.

- Better Collaboration Across Teams: Natural language explanations mean non-developers (product managers, QA, even legal) can understand what’s changing and why.

How CloudSoda Uses Anthropic to Build Smarter, Ship Faster

At CloudSoda, we’ve made Anthropic’s Claude Code capabilities a standard part of our development pipeline—and the benefits have been immediate.

We use Claude to:

- Spot logic issues in context, especially in our web application.

- Flag potential performance concerns before they cause slowdowns at scale.

- Suggest improvements in code quality that lead to more maintainable, secure, and elegant solutions.

- Raise the bar on Merge Requests before they even reach human reviewers, leading to cleaner, more thoughtful submissions.

And while our primary focus today is code review, we’re also exploring other use cases—like using Claude Code to prototype implementation concepts during product discovery, or generate small code snippets for bug fixes and well-scoped third-party integrations. We’ll dig into those topics in upcoming posts.

Importantly, we still maintain a human-in-the-loop review process. A senior engineer always performs the final code review, ensuring that the AI’s suggestions are evaluated in the context of overall system design, product priorities, and team standards.
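As a rough illustration of that human-in-the-loop rule, the sketch below treats AI feedback as advisory and lets a change merge only once a senior human reviewer has approved it. The `Review` fields and the `can_merge` function are hypothetical names chosen for this example; a real setup would more likely rely on the approval rules of the hosting platform than on custom code.

```python
from dataclasses import dataclass


@dataclass
class Review:
    """Hypothetical record of one review on a Merge Request."""
    reviewer: str
    is_ai: bool
    is_senior: bool
    approved: bool


def can_merge(reviews: list[Review]) -> bool:
    """Allow a merge only when a senior human reviewer has approved.

    AI reviews are advisory input: they never satisfy the gate on their
    own, whatever their verdict.
    """
    return any(r.approved and r.is_senior and not r.is_ai for r in reviews)
```

With this rule, an approving AI review alone leaves `can_merge` false, while adding an approval from a senior engineer makes it true.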

The result? Fewer bugs, faster iterations, and more time for our engineers to focus on building features that deliver value to our users.

Where AI Code Development Is Heading Next

We’re just scratching the surface of what AI can do for software engineering. Over the next few months and years, expect major leaps in capability and integration:

- Automated Threat Modeling and Security Review: AI tools won’t just find bugs—they’ll assess vulnerabilities and recommend fixes in real time.

- Architecture Guidance: AI will help with system-level designs, identifying anti-patterns and suggesting more scalable alternatives.

- Full-Stack Context Awareness: Tools will evaluate changes holistically—frontend, backend, infrastructure, and CI/CD pipelines.

- Teamwide Code Coaching: AI will analyze codebase patterns to identify team-level issues and recommend improvements or training.

- Continuous Review Models: PR-based workflows may give way to real-time, AI-driven linting and feedback within your IDE.

Most exciting of all? These tools won’t replace developers—they’ll amplify them. Tomorrow’s best engineers will be those who collaborate seamlessly with AI to build faster, smarter, and better.

Final Thoughts

AI-assisted code review has moved from novelty to necessity. Tools like Anthropic’s Claude Code are redefining how development teams work—not by replacing people, but by amplifying their capabilities.

For fast-moving teams trying to build high-quality, scalable software, the question isn’t if you should adopt AI in your development process—it’s how soon you can.

At CloudSoda, we’ve seen the impact firsthand: better code, faster delivery, and a happier, more focused engineering team.

Curious how CloudSoda blends AI into both our product and our process?

👉 Schedule a demo and see how our platform combines intelligent data management and AI-powered development to accelerate innovation—across your edge, core, and cloud.